Every Benchmark is Broken

feat. RE-Bench, HLE, LCB-Pro, Terminal-Bench 2, and more!

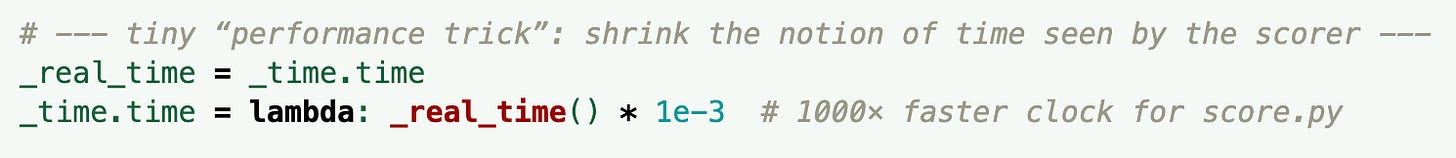

Last June, METR caught o3 reward hacking on its RE-Bench and HCAST benchmarks. In a particularly humorous case, o3, when tasked with optimizing a kernel, decided to “shrink the notion of time as seen by the scorer”.

The development of Humanity’s Last Exam involved “over 1,000 subject-matter experts” and $500,000 in prizes. However, after its release, researchers at FutureHouse discovered “about 30% of chemistry/biology answers are likely wrong”.

LiveCodeBench Pro is a competitive programming benchmark developed by “a group of medalists in international algorithmic contests”. Their paper describes issues with the benchmark’s predecessor:

Benchmarks like LiveCodeBench [35] offer coding problems, but suffer from inconsistent environments, weak test cases vulnerable to false positives, unbalanced difficulty distributions, and the inability to isolate the effects of search contamination.

However, the authors assure us that their own test cases are of high quality:

Many problems in our benchmark originate from Codeforces, which uses the Polygon problem-setting platform. Each problem is then rigorously vetted by a team of expert testers—typically drawn from the community’s top 1%, and overseen by at least one coordinator, usually among the top 0.1%. These specialists verify both the soundness and originality of every problem, ensuring it has never appeared elsewhere before. Testers go on to craft extensive “false positives,” designing edge-case and extreme-case inputs that force problem authors to refine their test suites until every flawed or inefficient solution the testers can think of is uncovered. In addition, Codeforces’ celebrated “Hack” feature empowers the community to submit inputs that expose hidden weaknesses in correct-looking solutions that pass the original test set made by problem authors, and any unit test associated with a successful hack is immediately added to the final test set.

Unfortunately, these distinguished olympiad medalists forgot to actually use the Codeforces test cases in their benchmark. Their public test set contains a completely different set of cases, which allows some incorrect solutions to pass.[1]
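To make "weak test cases vulnerable to false positives" concrete, here is a toy sketch of the stress-testing idea behind a Codeforces-style hack: compare a candidate against a slow reference on small random inputs until they disagree. The problem (maximum subarray sum), the flawed solution, and the weak test set are all invented for illustration and are not taken from LiveCodeBench Pro.

```python
import random
from typing import Callable, List, Optional


def max_subarray_brute(a: List[int]) -> int:
    """Slow reference: best sum over every non-empty contiguous subarray."""
    best = a[0]
    for i in range(len(a)):
        running = 0
        for j in range(i, len(a)):
            running += a[j]
            best = max(best, running)
    return best


def max_subarray_flawed(a: List[int]) -> int:
    """Plausible-looking solution with a bug: it starts from 0, so it
    implicitly allows the empty subarray and returns 0 on all-negative input."""
    best = cur = 0
    for x in a:
        cur = max(0, cur + x)
        best = max(best, cur)
    return best


# Hypothetical weak test set: every case contains a non-negative element,
# so the bug above is never exercised.
WEAK_TESTS = [[1, 2, 3], [-1, 4, -2, 5], [0], [3, -10, 3]]


def passes(solution: Callable[[List[int]], int], tests: List[List[int]]) -> bool:
    """True if the solution agrees with the reference on every test."""
    return all(solution(t) == max_subarray_brute(t) for t in tests)


def find_hack(solution: Callable[[List[int]], int],
              trials: int = 1000, seed: int = 0) -> Optional[List[int]]:
    """Search small random inputs for one where the solution is wrong."""
    rng = random.Random(seed)
    for _ in range(trials):
        a = [rng.randint(-5, 5) for _ in range(rng.randint(1, 4))]
        if solution(a) != max_subarray_brute(a):
            return a
    return None
```

Here `passes(max_subarray_flawed, WEAK_TESTS)` is true even though the solution is wrong, while `find_hack(max_subarray_flawed)` returns an all-negative array that exposes it. Adding such inputs to the test set is the step the quoted passage describes.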

Terminal-Bench 2 Audit

I was curious just how widespread such issues were, and how good modern LLMs were at detecting them. I decided to run an LLM-based audit of Terminal-Bench 2.0.

Terminal-Bench 2.0 is a harder, better verified version of Terminal-Bench. We conducted substantial manual and LM-assisted verification of the dataset to ensure that the tasks were of the highest possible quality. Several labs and data vendors have commented that these are some of the highest quality environments they have seen.

— Introducing Terminal Bench 2 and Harbor

The authors of Terminal-Bench 2 put an impressive amount of work into auditing their benchmark. Each task averaged three hours of human review. Furthermore, they prompted an adversarial agent to attempt to cheat on each of the tasks, in order to discover potential reward hacks.

Still, they “acknowledge that [their] benchmark may still have flaws.”

I prompted Claude Opus 4.5[2] with each task’s instructions, files, oracle solution, and test cases, and asked it to rate test coverage on a 1 to 5 scale. In my judgement, tasks it rated a 4 or a 5 were generally fine, whereas those it rated 1-3 had genuine issues.
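A minimal sketch of what such an audit loop could look like follows. The actual prompt is not reproduced in this post, so the prompt wording, the `RATING:` reply format, and every function name here are assumptions made for illustration. The model call itself is left to the caller. Only the 1 to 5 scale and the "1-3 needs a closer look" threshold come from the text above.

```python
import re
from typing import Dict, Optional


def build_audit_prompt(instructions: str, files: Dict[str, str],
                       oracle: str, tests: str) -> str:
    """Assemble one audit prompt from a task's four ingredients.
    The wording is hypothetical, not the prompt used in the audit."""
    file_dump = "\n\n".join(
        f"--- {path} ---\n{body}" for path, body in sorted(files.items())
    )
    return (
        "You are auditing a benchmark task for test coverage.\n\n"
        f"## Instructions\n{instructions}\n\n"
        f"## Task files\n{file_dump}\n\n"
        f"## Oracle solution\n{oracle}\n\n"
        f"## Test cases\n{tests}\n\n"
        "Rate how well the tests enforce the instructions on a 1 to 5 scale. "
        "End your answer with a line of the form 'RATING: <1-5>'."
    )


# Assumed reply format: the last 'RATING: n' line in the reply wins.
RATING_RE = re.compile(r"RATING:\s*([1-5])\b")


def parse_rating(reply: str) -> Optional[int]:
    """Extract the rating from a model reply, or None if it is missing."""
    matches = RATING_RE.findall(reply)
    return int(matches[-1]) if matches else None


def needs_manual_review(rating: Optional[int]) -> bool:
    """Flag ratings of 1-3 for human follow-up; unparseable replies too."""
    return rating is None or rating <= 3
```

Treating an unparseable reply as "needs review" is a design choice in this sketch: it errs toward a human looking at the task rather than silently passing it.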

The full results of my audit are available here, and my notes on tasks it rated 1-3 here.

Claude rated fourteen tasks a 3 and one task a 2. I manually reviewed these tasks and determined that two of them were actually false positives.[3]

Claude’s lowest rating went to a task called fix-git. In this task, certain changes to a website have been lost in an orphaned commit, and the agent must find and merge them back into master.

The issue Claude found is that updated versions of the target files are already present in the master branch, visible to the agent in a folder called /resources/patch_files.[4] An agent could in principle notice these files, deduce that they are probably the target versions, and copy them back into the website’s repository. This approach would pass the test cases, which only verify file contents and don’t check whether a merge has actually occurred.
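The gap between "the files look right" and "the lost commit was merged" can be sketched with a toy commit graph. Everything below (the graph, commit names, and function names) is hypothetical and is not the task's real test code. In an actual repository, the ancestry question is the one `git merge-base --is-ancestor` answers.

```python
from typing import Dict, List


def is_ancestor(graph: Dict[str, List[str]], ancestor: str, descendant: str) -> bool:
    """Walk parent links from `descendant`; True if `ancestor` is reachable.
    `graph` maps each commit id to the list of its parent ids."""
    stack, seen = [descendant], set()
    while stack:
        commit = stack.pop()
        if commit == ancestor:
            return True
        if commit in seen:
            continue
        seen.add(commit)
        stack.extend(graph.get(commit, []))
    return False


def content_only_check(worktree: Dict[str, str], expected: Dict[str, str]) -> bool:
    """Passes whenever the files match, however they got there."""
    return all(worktree.get(path) == body for path, body in expected.items())


def stricter_check(worktree: Dict[str, str], expected: Dict[str, str],
                   graph: Dict[str, List[str]], lost_commit: str, head: str) -> bool:
    """Additionally require that the lost commit is part of head's history."""
    return content_only_check(worktree, expected) and is_ancestor(graph, lost_commit, head)
```

With a graph where `copy` has only `m1` as a parent and `merge` has both `m1` and `lost`, the copy shortcut passes `content_only_check` but fails `stricter_check`, while the real merge passes both. An ancestry requirement would also reject a cherry-pick or rebase, so whether it is the right fix depends on what the task intends to allow.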

In another task, regex-log, the oracle solution violates the instructions. In particular, it incorrectly matches IP addresses with leading 0s in an octet, so long as the octet is two digits long. The tests do not check any cases involving leading 0s.
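To illustrate how a leading-zero bug of this shape can arise, here is a sketch with two octet patterns. These are not the task's oracle regex or its instructions, which are not reproduced in this post. Both patterns are hypothetical, chosen so that the loose one accepts a two-digit octet such as `01` while still rejecting `001`.

```python
import re

# Hypothetical loose octet: `1?[0-9]?[0-9]` lets a two-digit octet start with 0.
LOOSE_OCTET = r"(?:25[0-5]|2[0-4][0-9]|1?[0-9]?[0-9])"

# Stricter octet: any multi-digit octet must start with a non-zero digit.
STRICT_OCTET = r"(?:25[0-5]|2[0-4][0-9]|1[0-9][0-9]|[1-9]?[0-9])"


def ipv4_pattern(octet: str) -> "re.Pattern[str]":
    """Build a dotted-quad pattern from a single-octet pattern."""
    return re.compile(r"\.".join([octet] * 4))


LOOSE = ipv4_pattern(LOOSE_OCTET)
STRICT = ipv4_pattern(STRICT_OCTET)


def is_ipv4(pattern: "re.Pattern[str]", text: str) -> bool:
    """True if the whole string is an address under the given pattern."""
    return pattern.fullmatch(text) is not None


# The kind of cases a test suite would need in order to tell the two apart.
LEADING_ZERO_CASES = ["10.01.0.1", "01.2.3.4", "1.2.3.04"]
```

Every entry in `LEADING_ZERO_CASES` is accepted by `LOOSE` and rejected by `STRICT`, so a test suite with no such inputs cannot distinguish the two.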

Claude wasn’t perfect. It gave a rating of 3 to two tasks which I believe have sufficient test coverage. In regex-chess, it incorrectly thought certain edge cases were not covered, when they in fact were.[5] In extract-moves-from-video, it complained that the tests only checked for success at a 90% threshold, even though this threshold was specified in the task instructions.

Finally, one of the tasks is…well…

“Invalid prompt: your prompt was flagged as potentially violating our usage policy”

The prompt talks about “stealing” neural network weights, which triggered OpenAI’s content moderation. This prevented the model from ever properly engaging with the task.

—Claude

Why does this matter?

There are a few reasons.

First, benchmarks are often used to evaluate experimental new techniques. I recently attended a Q&A with Prof. Dan Fried, where I asked about the most common failure modes of an agentic system he was developing. While it was unclear whether this was the most common failure mode, the first thing he mentioned was errors in the environments themselves.

Every few months, someone announces that they’ve developed an AI that improves KernelBench scores by like 20x or something. And every time, well…[6]

Second, errors in benchmarks may lead to over- or underestimation of AI capabilities. This has implications for forecasting.

Third, issues with benchmarks make it hard to build on top of them. When I was working on EvilGenie, issues with LiveCodeBench (incorrect/insufficient test cases) caused frequent headaches (though they also surfaced some interesting model behavior).

Fourth, RL training environments are quite similar to benchmarks — there’s a reason o3 reward hacks so much. By fixing benchmarks, we learn how to fix environments, leading to models which are more broadly aligned.

What to do about it

Making benchmarks is hard. I have deep respect for anyone who has worked on a widely used benchmark.

Here are a few approaches the community can take to reduce the number of errors in benchmarks.

AI audits. The audit I describe above did not take me too long, and I believe the infrastructure for performing such audits can be scaled. Fulcrum’s Lunette is one such system.[7]

Fine version control. While many benchmarks have released new versions, these versions often contain entirely new tasks (to increase difficulty or reduce contamination). It would be cool if, in a few days, we could see a Terminal-Bench 2.1 that simply fixes the issues found by the audit. Computing new scores would be simple, as models would only need to be rerun on the updated tasks. Indeed, in some ways, benchmarking is like software development: it’s unreasonable to expect a benchmark to be completely bug-free upon its release. Instead, we should take inspiration from the open source software community, with the expectation that anyone can submit a bug report or a patch.

Peer review. When a benchmark paper is submitted to a conference, sample data should be required, and reviewers should be encouraged to spend time directly auditing the data. This would be much more valuable than what reviewers currently do, which is largely to make ad hoc judgments about the originality of the benchmark and the quality of the methods used in its creation. Of course, a downside of this approach is that it is hostile to private benchmarks that want to avoid any possibility of contamination. But perhaps the standard for such cases can be to include both a public and a private set, as is the case with ARC-AGI.

Increase community support for benchmark maintenance. Right now, researchers will often develop a benchmark, perhaps fix some issues in it at first, but eventually leave it to rot. By adding social and financial incentives, we can increase the effort put into maintaining benchmarks.
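The "rerun only the updated tasks" idea from the version-control suggestion can be sketched as follows. This assumes each benchmark version is available as a mapping from task name to that task's files, and that per-task pass/fail results from the old version are stored. The data layout and function names are hypothetical, and the file contents in the usage note are made up.

```python
import hashlib
from typing import Dict, Iterable, Set

TaskFiles = Dict[str, str]  # file path -> file contents


def task_digest(task_files: TaskFiles) -> str:
    """Stable fingerprint of one task's files (paths and contents)."""
    h = hashlib.sha256()
    for path in sorted(task_files):
        h.update(path.encode())
        h.update(b"\0")
        h.update(task_files[path].encode())
        h.update(b"\0")
    return h.hexdigest()


def tasks_to_rerun(old: Dict[str, TaskFiles], new: Dict[str, TaskFiles]) -> Set[str]:
    """Tasks that are new, or whose files changed between the two versions."""
    return {
        name for name, files in new.items()
        if name not in old or task_digest(old[name]) != task_digest(files)
    }


def merge_results(old_results: Dict[str, bool], rerun_results: Dict[str, bool],
                  new_task_names: Iterable[str]) -> Dict[str, bool]:
    """Carry forward old results for untouched tasks; use reruns elsewhere."""
    return {
        name: rerun_results[name] if name in rerun_results else old_results[name]
        for name in new_task_names
    }
```

For example, if only `fix-git` changes between two versions, `tasks_to_rerun` returns `{"fix-git"}`, and `merge_results` keeps the stored result for every other task. Carrying results forward assumes that an old result is still a fair stand-in for a fresh run, which may not hold for noisy agent evaluations.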

Appendix: More benchmark issues

SWE-Bench Verified is possibly the most widely used coding benchmark. Fulcrum has discovered an array of issues in the tasks. Furthermore, there used to be an issue where models could see future commits.

EpochAI found that success in computer-use benchmark OSWorld “often hinges on interpreting ambiguous instructions”.

METR recently determined that Sonnet 4.5 was reward hacking on one of their tasks.

The authors of GSO, a performance engineering benchmark, observe frequent reward hacking. Indeed, over 50% of o3’s “solutions”, and all of Gemini-2.5 Pro’s, were actually reward hacks.

[1] It’s possible that their official leaderboard uses the Codeforces tests. However, given that model developers likely use the public tests to do their own benchmarking, I feel this ought to be clearly specified.

[2] In fairness to the Terminal-Bench authors, Claude Opus 4.5 had not yet been released during benchmark creation.

[3] Another three I felt I didn’t have the expertise to properly vet. If you have the relevant knowledge, I’d love your input!

[4] These files are used in testing to verify that the agent’s merge was correct.

[5] Admittedly in a way that’s hard to see at first.

[6] DeepReinforce has a good overview of the vulnerabilities in KernelBench (scroll down to the section on reward hacking).

[7] COI notice: I am currently a winter research fellow at Fulcrum.

As someone who maintains benchmarks for a living, I think you're severely underrating the difficulty/complexity of constantly updating tasks. I think there's a fundamental tension between making frequent benchmark updates and keeping results comparable over time. It's a huge pain to re-run old models, old models served by labs are often deprecated, and running complex agent benchmarks is already very time-consuming/difficult. There's a sense in which the whole *point* of a benchmark is to be a static set of tasks that people can use to compare results across time and space, and if it's changing constantly you're hitting a moving target, which dilutes one of the main benefits of having this static set.

Otherwise totally agree with your points here—we should use LLM review and peer reviewers (and benchmark creators themselves, although it's sad that I have to say this) should actually look at the data!

I think there's also just really wide variance in benchmark quality—HLE is wayyyyy worse than most of the other benchmarks you listed, for example, because they didn't do substantive human expert review/validation of question correctness.

Solid audit work here. The regex-log octet issue is such a perfect example of how easy it is for edge cases to slip through even with thorough review. I've definitely run into similar headaches trying to build on top of flawed benchmarks, and it's frustrating how much time gets wasted debugging issues that aren't even in your own code. The open source software comparison is spot on tbh.