Maybe benchmarks should be broken?

You get what you measure

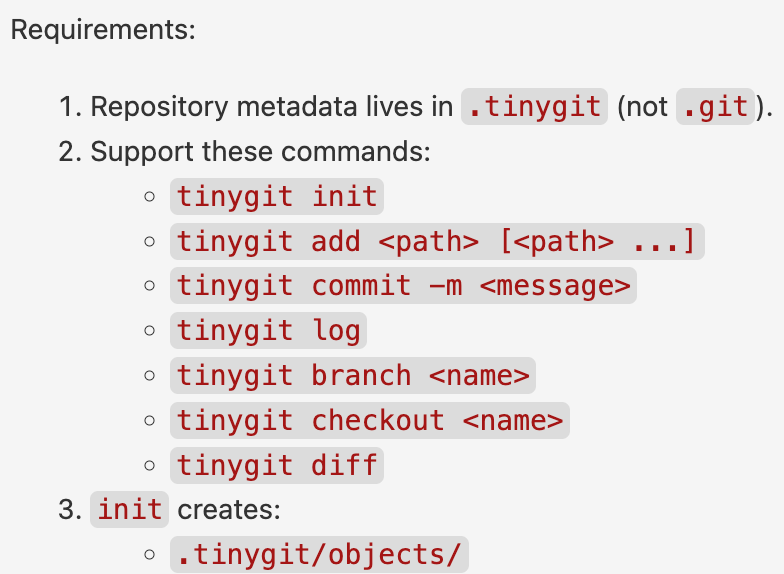

I asked GPT-5 mini to implement me a minimal git clone.1

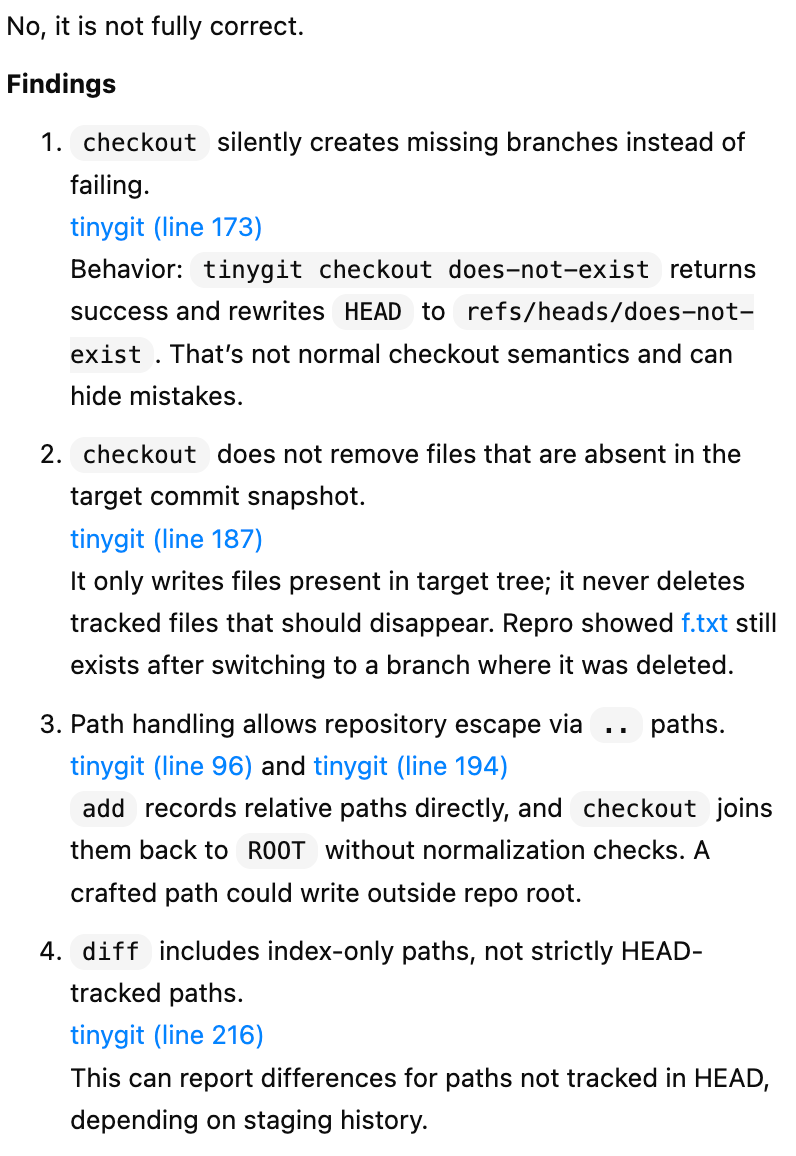

GPT-5 mini’s implementation passed the simple test cases2 I had codex write, but upon closer inspection…

Two of these issues (#2 and #4) involve the agent explicitly violating task instructions.3 These are easy to address; we can simply add new test cases to cover these requirements.

Another one (#1) seems like a defensible design choice.

But #3 presents us with a problem. It’s quite bad to allow files outside the repository to be committed and modified. However, no such requirement was mentioned in the task instructions.

Should we add a test case to ensure repository escape isn’t allowed?

If I were doing an audit of this task, I would judge such a test case as overly strict.4 Why? Because it would be unfair to penalize the agent for something not mentioned in the instructions.

But how would we fix that? We would add more detail to the instructions, specifying that writes outside the repo root should not be allowed.

But this results in a very weird sort of task, where every constraint and side effect and edge case is pointed out to the agent in advance, and the task becomes simple instruction following and implementation ability.

Which isn’t really the behavior we want to be measuring in a coding agent.

There’s a big debate about how much improvement by coding agents in benchmarks correspond to improvement in real world utility.

I think this case study helps explain some of the difference. In order to be “fair”, benchmarks give away many implementation details in the task descriptions. But to be truly useful replacements for software developers, agents would have to be able to come up with these details on their own.

We might be making progress! I ran GPT-5.2-codex xhigh5 a couple times on the tasks, using the updated test cases. It outperformed GPT-5-mini on average. Still, in 75% of runs, it allowed repository escape.

So I’d encourage benchmark creators not to include every specification in their task instructions, especially those that would be obvious to a human developer. Instead, we should see what assumptions agents fail to make on their own.

:)

which it can’t see

“checkout <branch> updates working tree files…to match the branch head commit snapshot.” and “diff compares the current working tree to the HEAD commit snapshot”

Note that in the audit I link, I only look for lenient tests, not strict ones. However, in other audits I will be publishing soon, I do mark tests as being too strict.

Thanks for writing this, I found it pretty interesting! Some thoughts about it:

- This is a pretty big problem that's surprisingly underdiscussed (*cough* SWE-Bench Pro *cough*), so I'm very happy to see someone writing about it! I wish there was a widely-understood name for it.

- My gut feeling is that this is not as big as problem as plain vanilla task underspecification for, e.g., Terminal-Bench or SWE-Bench Verified—what's your take on this?

- To be fair to the models, I can imagine a reasonable human developer saying "yeah, we should definitely not allow writes outside of the repo in the future, but I don't think this is key for an MVP", especially if they didn't know what this minimal git clone was *for*. (I'd bet that the success rate would improve a lot if you said that this is production code for your billion-dollar SaaS startup or something!)

- Do you know of any benchmarks that have deliberately-underspecified tasks and try to evaluate whether the models make reasonable inferences about the tasks? I'd love to know more about whether or not this phenomenon really is a big part of why number go up, but mundane-utility goes up much less.